Aligning Estimands, Identification, and Conclusions

Presented at the Causal Data Science Meeting (with Graham Goff).

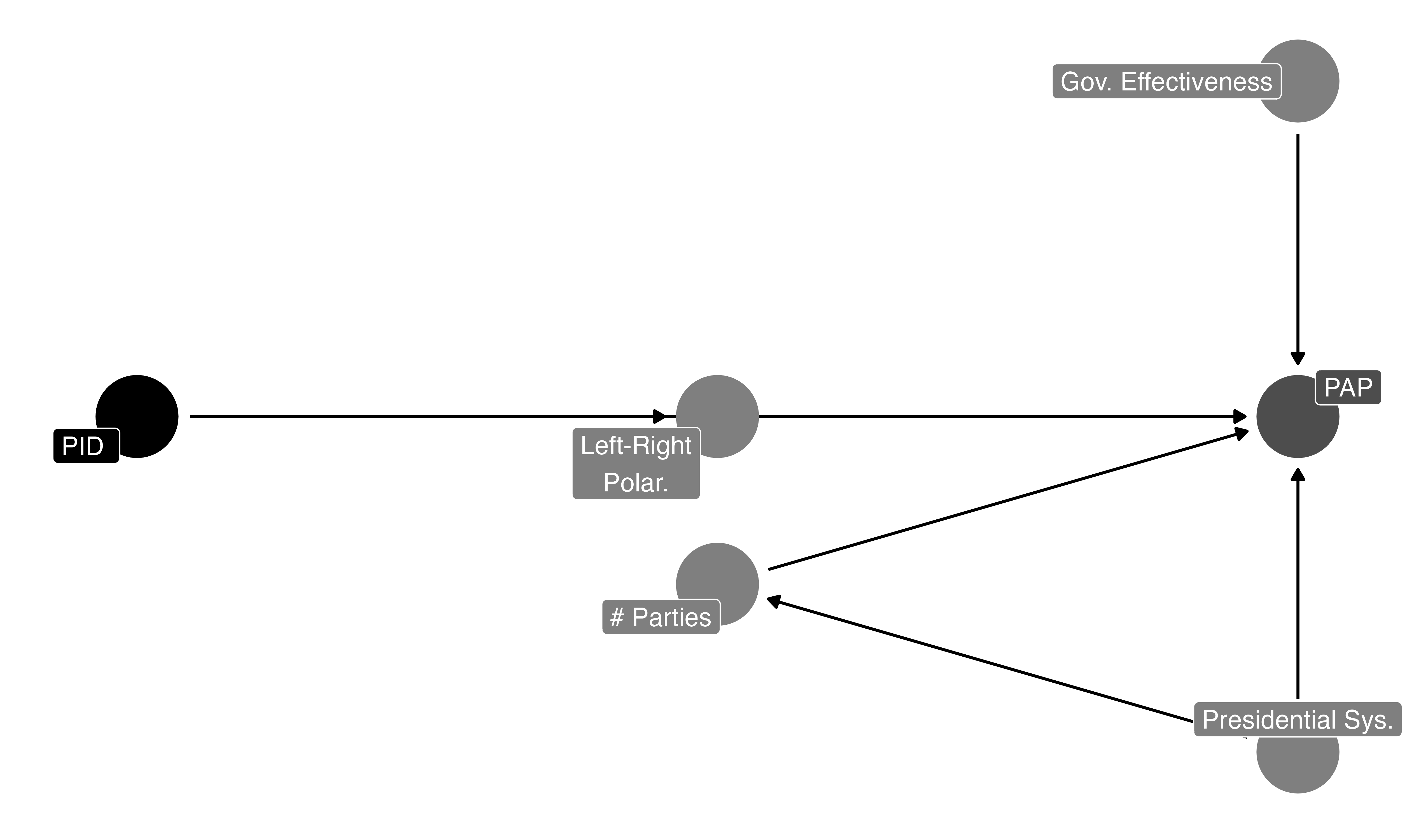

Regressions with control variables underpin observational social science, but the choice of controls can change the estimands and inferences that a model supports. In this paper, we introduce a workflow that combines Directed Acyclic Graphs (DAGs) with automated empirical estimation to recover target estimands. To illustrate its utility, we re-examine all 2024 American Political Science Review (APSR) articles that use observational regressions with controls. After incorporating author feedback on our DAGs, we find no colliders. However, 61% of articles include estimand-shifting mediators or their descendants, and 21% of articles interpret the resulting estimates as total effects without qualification. Removing DAG-inconsistent variables also meaningfully alters standard errors, coefficient magnitudes, and statistical significance. Reweighting to recover target estimands yields similar patterns for coefficient magnitudes and statistical significance, but it also produces some positivity failures. Our results suggest that using our R package, DAGassist, to diagnose assumptions before drawing conclusions can improve empirical practice.
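To make the idea of an estimand-shifting mediator concrete, here is a minimal simulation sketch. It is not the DAGassist workflow and it uses no data from the re-examined articles. The three-variable DAG (X affects Y directly and through a mediator M), the path coefficients, the sample size, and the function name `simulate_mediator_control` are all assumptions chosen for illustration.

```python
import numpy as np


def simulate_mediator_control(n=50_000, seed=0):
    """Compare the coefficient on x with and without a mediator as a control.

    Hypothetical DAG (an assumption for illustration): x -> m -> y and x -> y.
    Assumed path coefficients: x -> m is 0.8, m -> y is 0.5, x -> y is 0.5,
    so the direct effect is 0.5 and the total effect is 0.5 + 0.8 * 0.5 = 0.9.
    """
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)
    m = 0.8 * x + rng.normal(size=n)            # mediator on the x -> y path
    y = 0.5 * x + 0.5 * m + rng.normal(size=n)  # outcome

    def coef_on_x(*controls):
        # OLS of y on an intercept, x, and any additional control columns.
        design = np.column_stack([np.ones(n), x, *controls])
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        return coef[1]

    without_mediator = coef_on_x()   # targets the total effect of x on y
    with_mediator = coef_on_x(m)     # targets only the direct effect
    return without_mediator, with_mediator


total_hat, direct_hat = simulate_mediator_control()
print(f"x coefficient without mediator control: {total_hat:.3f}")
print(f"x coefficient with mediator control:    {direct_hat:.3f}")
```

Under these assumed coefficients, the regression that omits M estimates a quantity near the total effect, while the regression that controls for M estimates a quantity near the direct effect. Reporting the second coefficient as a total effect would therefore answer a different question than the one posed.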